MLflow 3.0 for GenAI: Traceability, Evaluation, and Scalability in One Platform

- Miguel Diaz

- Dec 30, 2025

- 05 Mins read

- AI

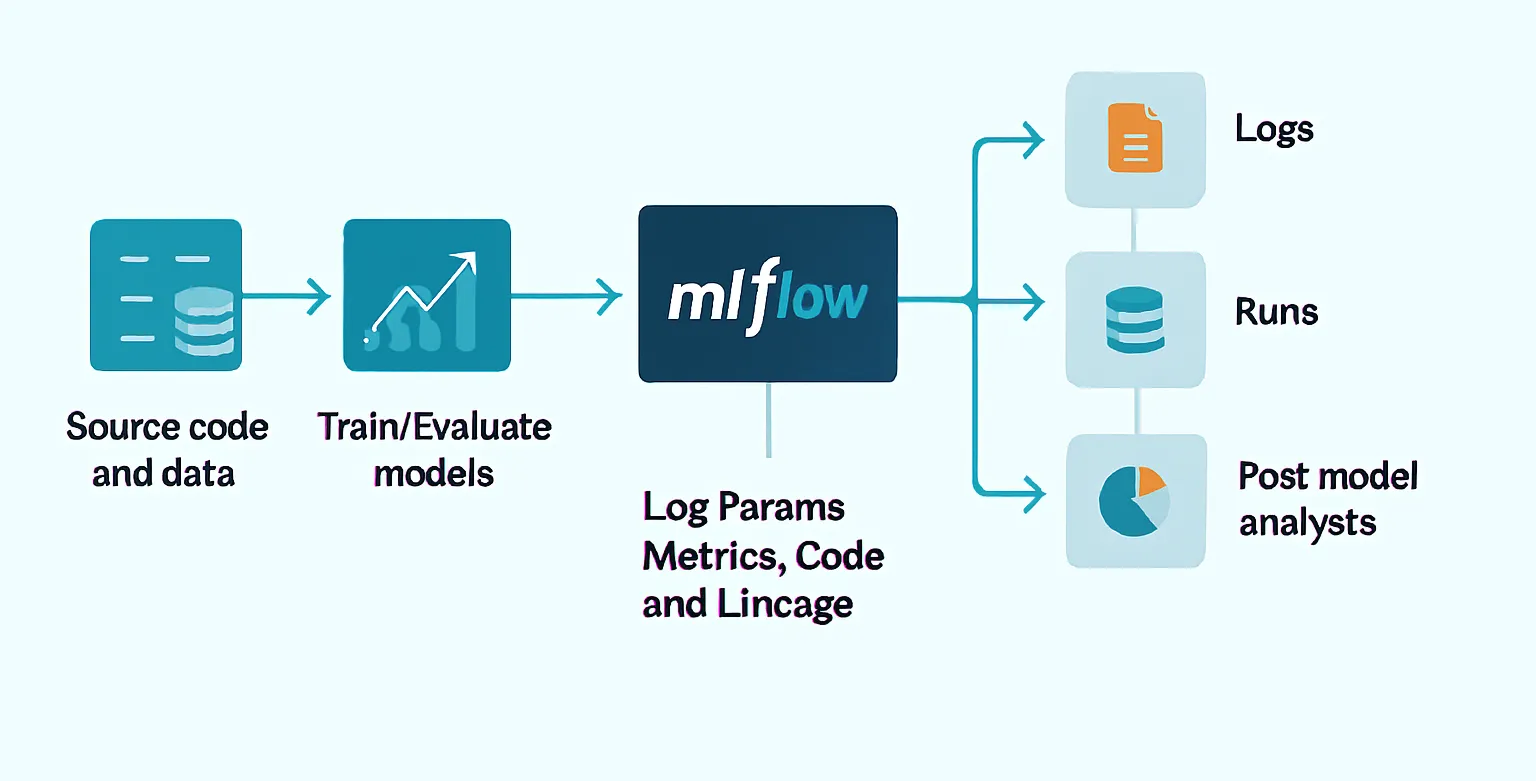

MLflow is an open-source platform designed to manage the end-to-end lifecycle of machine learning models and applications. Since its launch in 2018, it has become a standard in MLOps, enabling teams to log experiments, version artifacts, and reproduce deployments consistently.

The arrival of generative artificial intelligence (GenAI) has transformed this landscape. Systems no longer just predict: they now generate text, code, and complex content. This introduces new challenges that go beyond traditional MLOps: evaluating the quality of generated responses, versioning prompts, auditing LLM interactions, analyzing costs and latencies, and ensuring regulatory compliance in production environments.

MLflow 3.0 marked a turning point by introducing a foundation specifically designed for these challenges. Building on that, the latest stable release, MLflow 3.7.0, consolidates and matures these capabilities, providing robust tools for observability, evaluation, and governance of GenAI applications in production.

What is MLflow 3.0 and why is it key for GenAI?

MLflow 3 represents an evolution from the classic MLOps approach to a framework tailored for generative applications. This line introduces fundamental concepts that allow GenAI systems to be managed with the same rigor as traditional models, while considering their unique characteristics.

Its main pillars include:

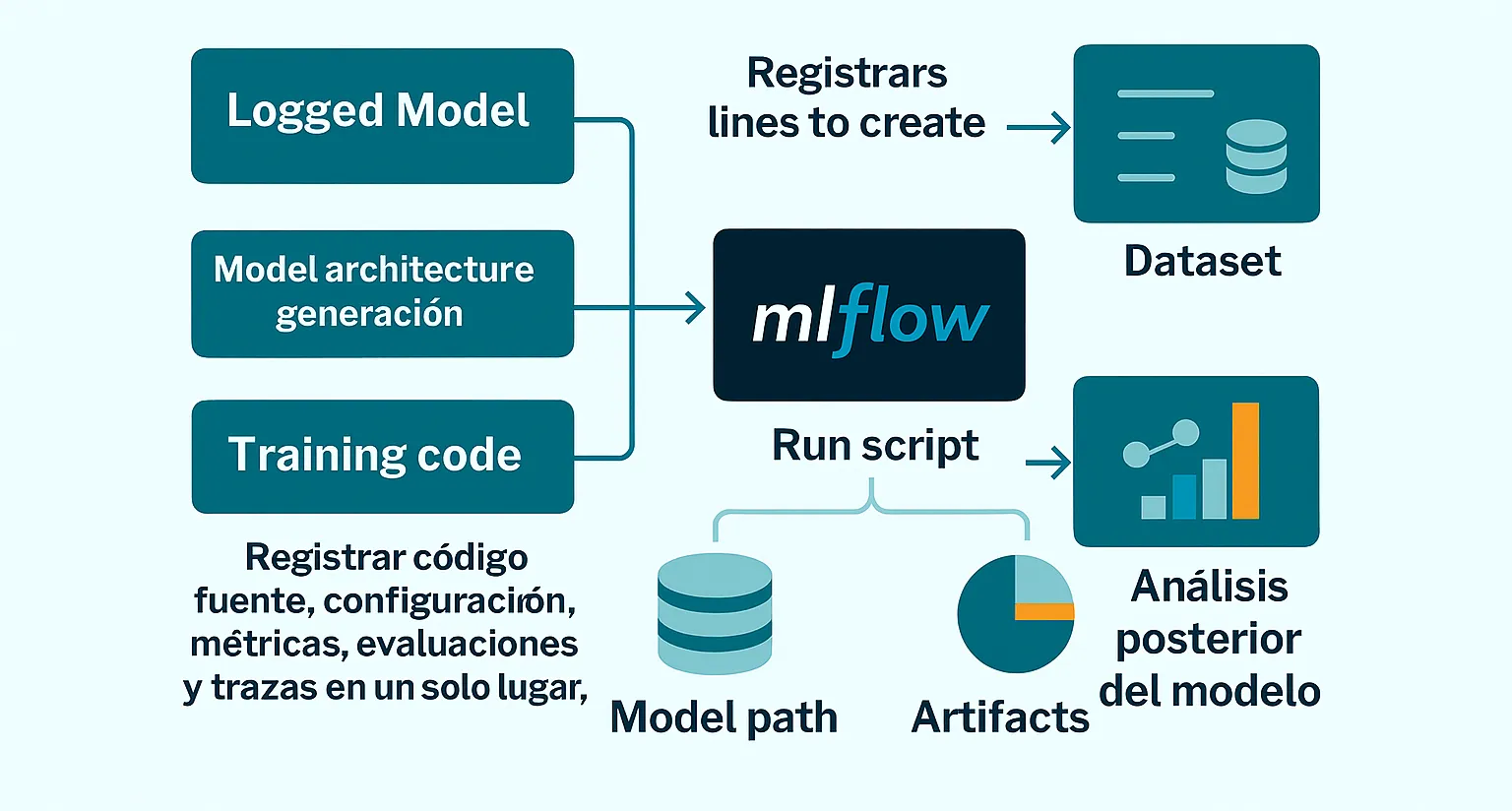

- LoggedModel as a first-class entity: the model is no longer just an artifact, but an object that groups source code, configuration, metrics, evaluations, and traces.

- Automatic traceability: systematic logging of prompts, responses, latencies, tokens, and costs for each execution.

- Native GenAI quality evaluation: automated metrics and judges to analyze relevance, correctness, safety, and hallucinations.

- Enterprise governance and compliance: integration with catalogs and access controls for auditing, versioning, and reproducibility.

This approach makes MLflow a unified platform where experimentation, monitoring, and operation of GenAI applications can be managed consistently.
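
To make the traceability pillar above more concrete, here is a minimal sketch of how a per-call cost could be derived from logged token counts. It is not MLflow's internal logic: the `TokenUsage` class, the `estimate_cost` helper, and the per-1K-token prices are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class TokenUsage:
    """Token counts for one LLM call (hypothetical record, not an MLflow type)."""
    input_tokens: int
    output_tokens: int


def estimate_cost(usage: TokenUsage, input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Return the estimated cost in USD for a single call."""
    input_cost = usage.input_tokens / 1000 * input_price_per_1k
    output_cost = usage.output_tokens / 1000 * output_price_per_1k
    return round(input_cost + output_cost, 6)


# Illustrative prices only; real prices depend on the provider and model.
usage = TokenUsage(input_tokens=1200, output_tokens=300)
cost = estimate_cost(usage, input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"Estimated cost: ${cost:.4f}")
```

Once token counts are stored with every trace, the same arithmetic can be aggregated per user, per prompt version, or per day.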

Key Advantages

| Advantage | Description |
|---|---|
| Unified platform | A single environment from experimentation to production operation. |
| Total flexibility | Works with any LLM and integrates with providers and frameworks such as OpenAI, Anthropic, LangChain, and LlamaIndex. |
| Consistent metrics | The metrics measured in development are the same ones tracked in production. |
| Integrated human feedback | Real interactions become inputs for continuous improvement. |
| Prompt versioning | Systematic management of prompts as versionable artifacts. |
| Security and compliance | Integrated governance, auditing, and access control. |

MLflow 3.7.0: maturity for GenAI applications in production

MLflow 3.7.0 is the latest stable release of the 3.x series. It does not introduce a new paradigm, but rather reinforces and expands the capabilities introduced in MLflow 3.0, focusing on the real challenges of operating GenAI at scale.

The most relevant improvements include:

Advanced prompt management

The experiments interface allows you to search, filter, and analyze registered prompts, making reviews, audits, and version comparisons easier without additional manual processes.
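
The idea behind versioned, searchable prompts can be sketched in a few lines. MLflow ships its own prompt registry; the example below deliberately does not use it and instead relies on a hypothetical in-memory `PromptRegistry` class, so every name in it is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """In-memory stand-in for a prompt registry (hypothetical, for illustration)."""
    _versions: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def load(self, name: str, version: int) -> str:
        """Fetch a specific version so that runs stay reproducible."""
        return self._versions[name][version - 1]

    def search(self, keyword: str) -> list[tuple[str, int]]:
        """Return (name, version) pairs whose template contains the keyword."""
        return [
            (name, idx + 1)
            for name, templates in self._versions.items()
            for idx, template in enumerate(templates)
            if keyword.lower() in template.lower()
        ]


registry = PromptRegistry()
registry.register("summarizer", "Summarize the following text: {text}")
v2 = registry.register("summarizer", "Summarize the following text in {n} bullet points: {text}")
print(registry.search("bullet"))
```

Because earlier versions are never overwritten, an audit can always recover the exact prompt text that a past run used.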

Multi-turn evaluation

MLflow expands its evaluation capabilities to support full conversations, enabling the analysis of conversational flows and agents that interact in multiple steps, rather than isolated responses.
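
As a rough illustration of what conversation-level evaluation means, the sketch below scores every user/assistant exchange and then aggregates over the whole dialogue. The `evaluate_conversation` helper and the toy `keyword_overlap` scorer are assumptions for this example; a real setup would typically plug in an LLM-based judge instead.

```python
from typing import Callable

Turn = dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def evaluate_conversation(turns: list[Turn], turn_scorer: Callable[[str, str], float]) -> dict:
    """Score each user/assistant exchange, then aggregate over the conversation."""
    scores = []
    for prev, curr in zip(turns, turns[1:]):
        if prev["role"] == "user" and curr["role"] == "assistant":
            scores.append(turn_scorer(prev["content"], curr["content"]))
    return {
        "exchanges": len(scores),
        "mean_score": sum(scores) / len(scores) if scores else 0.0,
        "worst_score": min(scores, default=0.0),
    }


def keyword_overlap(question: str, answer: str) -> float:
    """Toy scorer: fraction of question words echoed in the answer (not an LLM judge)."""
    q_words = set(question.lower().split())
    a_words = set(answer.lower().split())
    return len(q_words & a_words) / len(q_words) if q_words else 0.0


conversation = [
    {"role": "user", "content": "reset my password"},
    {"role": "assistant", "content": "I can help reset your password"},
    {"role": "user", "content": "which email"},
    {"role": "assistant", "content": "Please check your inbox"},
]
report = evaluate_conversation(conversation, keyword_overlap)
print(report)
```

Reporting the worst exchange alongside the mean helps surface a single weak turn that an average over the conversation would hide.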

Trace comparison

The trace comparison feature lets you analyze executions side by side and identify differences in behavior, quality, latency, or cost, which is key for detecting regressions before they impact the business.
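
The kind of check a side-by-side comparison enables can be sketched as follows. This is not the MLflow UI or API: the `compare_traces` function, the metric names, and the 10% tolerance are assumptions, and the sketch treats every metric as "lower is better".

```python
def compare_traces(baseline: dict, candidate: dict, tolerance: float = 0.10) -> dict:
    """Report the relative change per shared metric and flag increases above the tolerance."""
    report = {}
    for metric in baseline.keys() & candidate.keys():
        old, new = baseline[metric], candidate[metric]
        if old == 0:
            change = 0.0 if new == 0 else float("inf")
        else:
            change = (new - old) / old
        report[metric] = {"change": round(change, 3), "regression": change > tolerance}
    return report


# Illustrative numbers, not measurements.
baseline = {"latency_ms": 800, "total_tokens": 1500, "cost_usd": 0.020}
candidate = {"latency_ms": 1000, "total_tokens": 1520, "cost_usd": 0.021}
comparison = compare_traces(baseline, candidate)
for metric, result in sorted(comparison.items()):
    print(metric, result)
```

In this example only latency exceeds the tolerance, so a reviewer would know where to look before promoting the candidate.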

Observability in complex flows

Traceability is improved in applications with agents, toolchains, and chained calls, providing a clear view of how each request flows through the system.
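
To show what a trace of a multi-step request contains, here is a minimal sketch of nested, timed spans. MLflow's tracing API offers its own decorators and context managers for this; the example avoids them on purpose, and the `span` helper plus the span names are hypothetical.

```python
import time
from contextlib import contextmanager

spans = []   # Completed spans, in the order they finished
_stack = []  # Names of the spans that are currently open


@contextmanager
def span(name: str):
    """Record a timed span and remember which span it was opened inside."""
    parent = _stack[-1] if _stack else None
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield
    finally:
        _stack.pop()
        spans.append({
            "name": name,
            "parent": parent,
            "duration_ms": (time.perf_counter() - start) * 1000,
        })


with span("agent_request"):
    with span("retrieve_documents"):
        time.sleep(0.01)  # Stand-in for a retrieval tool call
    with span("llm_call"):
        time.sleep(0.01)  # Stand-in for a model call

for s in spans:
    print(f'{s["name"]} (parent={s["parent"]}): {s["duration_ms"]:.1f} ms')
```

The parent links are what allow a viewer to rebuild the call tree and show which step of a chained request consumed the time.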

Governance and stability

Integrations with enterprise governance mechanisms are strengthened, improving auditability, access control, and operational stability in production environments.

Altogether, MLflow 3.7.0 is a mature release for teams needing to operate GenAI applications with high standards of quality and control.

Automatic traceability demonstration

Enabling traceability in MLflow 3.x does not require rewriting your application. Just activate autologging before running your code:

```python
import mlflow
from openai import OpenAI

mlflow.autolog()  # Enable automatic traceability

client = OpenAI()  # Reads the API key from the OPENAI_API_KEY environment variable

response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Summarize the quarterly report."}],
)

print(response.choices[0].message)
```

Automated Evaluation of GenAI Applications

Quality evaluation is one of the pillars of MLflow 3.7. This version introduces a comprehensive evaluation system that allows you to objectively, reproducibly, and continuously measure the performance of GenAI applications in any environment.

Safety

Detects and flags unsafe or sensitive content generated by the model, helping to prevent risks in production.

Hallucinations

Evaluates the fidelity and truthfulness of generated responses, identifying possible model inventions or errors.

Relevance and correctness

Verifies that responses are useful, accurate, and aligned with the user’s intent or use case.

Custom judges

Allows you to define evaluation criteria and metrics tailored to your business needs and objectives.
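
A custom judge boils down to a function that inspects a response and returns a verdict with a rationale. Judges in practice are often LLM-backed; the rule-based sketch below is a simplified stand-in, and the `Verdict` class, the `no_account_numbers_judge` rule, and the sample responses are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    passed: bool
    rationale: str


def no_account_numbers_judge(response: str) -> Verdict:
    """Hypothetical business rule: responses must not expose 10-digit account numbers."""
    match = re.search(r"\b\d{10}\b", response)
    if match:
        return Verdict(False, f"Found account-like number: {match.group()}")
    return Verdict(True, "No account-like numbers found")


def pass_rate(responses: list[str], judge) -> float:
    """Fraction of responses that the judge accepts."""
    verdicts = [judge(r) for r in responses]
    return sum(v.passed for v in verdicts) / len(verdicts)


responses = [
    "Your balance is available in the app.",
    "The transfer went to account 1234567890.",
]
rate = pass_rate(responses, no_account_numbers_judge)
print(rate)
```

Returning a rationale along with the pass/fail flag keeps each verdict explainable when someone reviews a failed case later.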

Together, these automated evaluations replace costly manual testing with reproducible, consistent checks.

Migration and Technical Considerations

MLflow 3.0 is not just an update: it’s a new way of working with GenAI models. The LoggedModel concept centralizes code, configuration, metrics, evaluations, and traces in one place, making auditing and reproducibility easier.
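
The benefit of grouping everything under one entity can be illustrated with a toy record. This is not the MLflow `LoggedModel` class: `LoggedModelRecord`, its fields, and the sample values are assumptions chosen only to show the idea.

```python
from dataclasses import dataclass, field


@dataclass
class LoggedModelRecord:
    """Toy record mirroring the idea of a LoggedModel (not the MLflow class)."""
    name: str
    code_version: str
    config: dict
    metrics: dict = field(default_factory=dict)
    trace_ids: list = field(default_factory=list)

    def audit_summary(self) -> str:
        """Summarize what an auditor needs, resolved from a single object."""
        return (
            f"{self.name}@{self.code_version} "
            f"config={self.config} metrics={self.metrics} traces={len(self.trace_ids)}"
        )


record = LoggedModelRecord(
    name="support-agent",
    code_version="a1b2c3d",  # Hypothetical Git commit hash
    config={"model": "gpt-4", "temperature": 0.2},
)
record.metrics["relevance"] = 0.91  # Illustrative value, not a real result
record.trace_ids.append("trace-001")
print(record.audit_summary())
```

With code version, configuration, metrics, and traces attached to the same object, reproducing or auditing a result no longer means joining several separate stores by hand.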

Additionally, with mlflow.autolog(), key information such as prompts, responses, latencies, and costs is automatically logged, both in development and production. This simplifies instrumentation and improves comparability across environments.

The diagram shows how these components integrate into a more structured flow, connecting code, data, and subsequent model analysis.

Featured Use Cases

Continuous optimization of conversational agents

Monitor prompts and responses in production to iteratively adjust and improve chatbot performance.

Regulatory compliance and auditing

Version models, metrics, and traces to support compliance with regulations and standards (for example, HIPAA or ISO standards) and to make audits easier.

Production degradation detection

Identify drops in performance and response quality early, so that the application can be corrected or retrained before the business is affected.
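
One simple way to detect such drops is to compare a rolling average of quality scores against a baseline. The sketch below assumes a hypothetical `QualityMonitor` class, a baseline of 0.90, and a maximum tolerated drop of 0.10; none of these come from MLflow.

```python
from collections import deque
from statistics import mean


class QualityMonitor:
    """Flag degradation when the rolling mean score falls too far below a baseline."""

    def __init__(self, baseline: float, window: int = 5, max_drop: float = 0.10):
        self.baseline = baseline
        self.max_drop = max_drop
        self.scores = deque(maxlen=window)

    def add(self, score: float) -> bool:
        """Record a score; return True once a full window shows degradation."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # Not enough data yet
        return mean(self.scores) < self.baseline - self.max_drop


monitor = QualityMonitor(baseline=0.90, window=3)
stream = [0.92, 0.88, 0.90, 0.75, 0.70, 0.72]  # Illustrative scores, not real results
alerts = [monitor.add(s) for s in stream]
print(alerts)
```

Using a window rather than single scores avoids alerting on one unlucky response while still reacting within a few requests.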

Training with human feedback

Turn real interactions into test cases and training data for continuous improvement.
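
Turning feedback into test cases can be as simple as filtering for corrected answers. In the sketch below, the `feedback_to_test_cases` helper and the record fields (`question`, `rating`, `corrected_answer`) are assumptions, not an MLflow schema.

```python
import json


def feedback_to_test_cases(feedback: list[dict]) -> list[dict]:
    """Turn negatively rated interactions that include a correction into regression tests."""
    cases = []
    for item in feedback:
        if item["rating"] == "thumbs_down" and item.get("corrected_answer"):
            cases.append({
                "inputs": {"question": item["question"]},
                "expected_response": item["corrected_answer"],
            })
    return cases


# Hypothetical feedback records; the field names are assumptions for this sketch.
feedback = [
    {"question": "What is the refund window?", "rating": "thumbs_up"},
    {"question": "Do you ship abroad?", "rating": "thumbs_down",
     "corrected_answer": "Yes, to selected countries."},
    {"question": "Is there a student discount?", "rating": "thumbs_down"},
]
test_cases = feedback_to_test_cases(feedback)
print(json.dumps(test_cases, indent=2))
```

Only feedback that carries a corrected answer becomes a test case here, since a bare thumbs-down does not say what the right response would have been.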

Conclusion

MLflow 3.0 laid the foundation for managing GenAI applications with rigor. Its evolution to MLflow 3.7.0 consolidates these capabilities and brings them to a level of maturity suitable for enterprise environments.

By integrating complete traceability, automated evaluation, and governance, MLflow offers a solid platform to scale GenAI applications with confidence, audit them precisely, and continuously improve them.

Adopting MLflow 3 is not just a technical decision: it’s a strategic bet on quality, control, and operational sustainability.

Resources

To learn more about MLflow 3 and its GenAI capabilities, check out the following official resources: